Friday, 5 June 2015

The Zero Theorem: Life in the Void

The Zero Theorem is a film directed by Terry Gilliam (of Brazil and 12 Monkeys fame) that, depending on where you live, was released late in 2013 or in 2014. It is set in a surreal version of the present, and in it we follow the journey of Qohen Leth (played by Christoph Waltz), a reclusive computer genius who "crunches entities" for a generic super-corporation, Mancom. The story is a fable, an allegory, and in watching it we are meant to take the issues it raises as existential ones.

Qohen Leth has a problem. Some years ago he took a phone call that was going to tell him the meaning of existence. But he got so excited at the prospect that he dropped the phone, and when he picked it up his caller was gone. Ever since, he has been waiting for a call back. But the call back never comes. So day by day he faces an existential struggle, because he desperately wants to know what the meaning of life is. His life, you see, is dominated by a vision of a giant black hole into which all things inevitably go. His work life is shown to be much like everyone else's in this parody of our world. People are "tools" and work is a meaningless task serving only to enrich those far above their pay grade. Workers are replaceable cogs who must be pushed as hard as possible to achieve maximum productivity. Their value lies in their productivity.

This world is run by corporations and the one that stands in for them all in the film is Mancom. Mancom have a special task for Qohen. They want him to work on an equation proving that "Everything adds up to nothing." That is, they want him to prove that existence is meaningless. Why do they want him to do this? Because, as the head of Mancom says in the film, in a meaningless universe of chaos there would be money to be made selling order. The point seems to be that commercial enterprises can make money from meaninglessness by providing any number of distractions, things to fill the hole at the centre of Being.

The film paints a picture of a world full of personalized advertising that is thrust at you from all angles. Everywhere there are screens, either pushing something into your face or serving as conduits to an online escape world where you can create a new you and flee the existential questions that the real world forces upon you. In one scene people are at a party but, instead of interacting with each other, they all dance around looking into tablets whilst wearing headphones. Further to this, there are cameras everywhere. If it's not the ones we use to broadcast ourselves into a cyber world, it's the ones our bosses use to watch us at work, or the ones in the street that can recognise us and beam personalized advertisements straight at us as we walk. This is the surveillance state for company profit, one that records and archives our existence.

And what of the people in this place? Most of them seem to be infantilized, lacking any genuine ambition and placated by "bread and circuses". Their lives are a mixture of apathy and misdirection. They seek meaning in screens, with virtual friends or in virtual worlds and, presumably, a lot of them take advantage of the constant advertisements they are bombarded with. When Qohen has something of a crisis early on in the film, "Management" send Bainsley along to his house (Qohen doesn't like going out or being touched and so he negotiates to work from home). Bainsley, unbeknownst to Qohen, is a sex worker in the employ of Mancom. She is sent as stress relief (so that this malfunctioning "tool" can be got back to productive work) and inveigles him into a virtual reality sex site which, in this case, has been tailored to Qohen's specific needs. (That is to say, it is enticing but not so overtly sexual as to give the game away. In essence, Bainsley becomes his sexy friend.) Other characters drop hints that Bainsley is just another tool but Qohen doesn't want to accept it. She is becoming something that might actually have meaning for him. But then, one day, Qohen goes back to the site and, by mistake, the truth about Bainsley is revealed and all his trust in this potential meaning evaporates. (One wonders how many people are online at pornography sites, filling the meaning-shaped hole by trying to find or foster such fake attachments.)

So what are we to make of this in our Google-ified, Facebooked, Game of Thrones-watching, Angry Birds-playing, online-pornography-soaked world of Tweeters and Instagrammers? I find it notable that Terry Gilliam says his film is about OUR world and not a future dystopia. And I agree with him. The trouble is I can sense a lot of people are probably shrugging and/or sighing now. This kind of point is often made and often apathetically agreed with, with a casual nod of the head. But not many people ever really seem to care. Why should we really care if hundreds of millions of us have willingly handed over the keys to our lives to a few super-corporations who provide certain services to us - but only on the basis that we give them our identities and start to fill up their servers with not just the details of our lives but their content as well? The technologization of our lives, and the provision of a connectedness that interferes with face-to-face connectedness, seems to be something no one really cares about. Life through a screen, or a succession of screens, is now a reality for an increasing number of people. In the UK there is a TV show called "Gogglebox" (which I've never watched) but no one ever seems to realise that they might be the ones who are spending their lives goggling.

So let's try and take off the rose-tinted specs and see things as they are once all the screens go black and all that's reflected at us are our real world faces and our real world lives. I wonder, what does life offer you? Thinking realistically, what ambitions do you have? (I don't mean some dumb bucket list here.) When you look at life without any products or games or TV shows or movies or online role playing games or social media to fill it with, when you throw away your iPhone and your iWatch, your Google Glass, and all your online identities, where is the meaning in your life to be found? When you look at life as it extends from your school days, through your working life to inevitable old age (if you are "lucky", of course), what meaning does that hold for you? Would you agree that this timeline is essentially banal, an existence which, by itself, is quite mechanical? Have you ever asked yourself what the point of this all is? Have you ever tried to fit the point of your life into a larger narrative? Do you look at life and see a lot of people who don't know what they are doing, or what for, allowing themselves to be taken through life on a conveyor belt, entertained as they pass through by Simon Cowell and Ant and Dec? Do you sometimes think that life is just a succession of disparate experiences with little or no lasting significance?

The Zero Theorem is essentially a film about the meaning of life. Gilliam, of course, made another film actually called The Meaning of Life with the rest of his Monty Python colleagues. Now you might be wondering why the question is even raised. Perhaps, for you, life has no meaning and that's not very controversial. You shrug off all my questions as not really very important. But I would reply by asking whether meaning itself has no meaning. For, put simply, there isn't a person alive who doesn't want something to mean something. Human beings just do need meaning in their lives. So Qohen Leth, for me, functions as an "Everyman" in this story. For we all want to know what things mean. And, without giving away the ending of the film, I think that, in the end, we all have to face up to the twin questions of meaning itself and of things meaning nothing. We all have to address the idea that values devalue themselves, that meanings are just things that we give, and that nothing, as Qohen hoped, was given from above, set in stone, a god before which we could bow and feel safe that order was secured.

For order is not secured. Some people might try to sell it to you. (In truth, many companies are trying to right now.) Others might try to convince you that they've got the meaning and order you need in your life and that you can have it too. But they haven't and you can't. That black hole that Qohen Leth keeps seeing is out there and everything goes into it. Our lives are lived in the void. The question then becomes: can you find meaning and purpose in the here and now, in the experience of living your life, or will you just pass through empty and confused, perhaps hoping that someone else will come along and provide you with meaning without you having to do any work? Who takes responsibility for finding that meaning? Is it someone else, as Qohen Leth hoped with his phone call, or is it you?

The question of meaning is, in the end, one that never goes away for any of us. Not whilst you're alive anyway.

Labels:

being,

existence,

existentialism,

films,

human,

humanity,

meaning,

movies,

nihilism,

ontology,

philosophy,

The Zero Theorem

Friday, 20 March 2015

Would You Worry About Robots That Had Free Will?

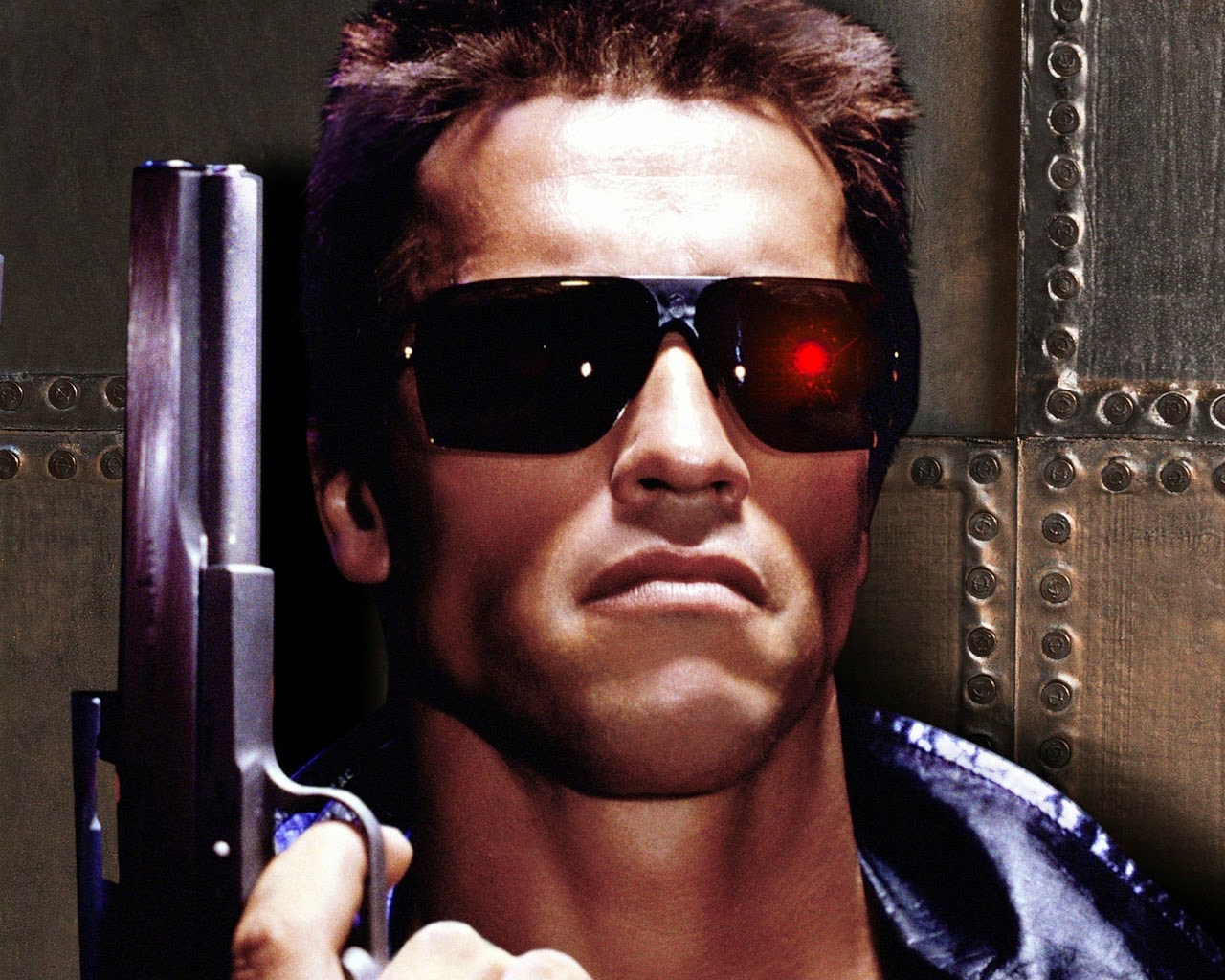

It's perhaps a scary thought, a very scary thought: an intelligent robot with free will, one making up the rules for itself as it goes along. Think Terminator, right? Or maybe the gunfighter Yul Brynner plays in "Westworld", a defective robot that turns from a fairground attraction into a super-intelligent machine on a mission to kill you? But, if you think about it, is it really as scary as it seems? After all, you live in a world full of 7 billion humans and they (mostly) have free will as well. Are you huddled in a corner, scared to go outside, because of that? Then why would intelligent robots with free will be any more frightening? What unspoken assumptions drive your decision to regard such robots as either terrifying or no worse than the situation we already find ourselves in? I suggest our thinking here is guided by our general thinking about robots and about free will. It may be that, in both cases, a little reflection clarifies matters once you dig under the surface.

Take "free will", for example. It is customary to regard free will as the freedom to act of your own volition, without coercion or pressure from outside sources in any sense. But, when you think about it, free will is not free in any absolute sense at all. Besides the everyday circumstances of your life, which directly affect the choices you can make, there is also your genetic make-up to consider. This affects your choices too, because it is responsible not just for who you are but for who you can be. In short, both nature and nurture act upon you at all times. What's more, you are one tiny piece of a chain of events, a stream of consciousness if you will, that you don't control. Some people would even suggest that things happen the way they do because they have to. Others, who believe in a multiverse, suggest that everything that can possibly happen is happening right now in a billion different versions of all possible worlds. Whether you believe that or not, the point stands: far more happens in the world every day that you don't control than the tiny amount that you do.

And then we turn to robots. Robots are artificial creations. I've recently watched a number of films which toy with the fantasy that robots could become alive. As Number 5 says in the film Short Circuit, "I'm alive!" As creations, robots have a creator. They rely on their creator's programming to function, and this programming delimits all the possibilities for the robot concerned. But there is a stumbling block, and it is called "artificial intelligence". Artificial intelligence, or AI, is like putting a brain (in effect, a computer) inside a robot, one which can learn and adapt in ways analogous to the human mind. This, it is hoped, allows the robot to begin making its own choices and developing its own thought patterns and ways of choosing. It gives the robot the ability to reason. It is a very moot point, for me at least, whether this would make the robot alive, conscious or self-aware. And would a robot that could reason through AI therefore have free will? Would that depend on the programmer, or could such a robot "transcend its programming"?

Well, as I've already suggested, human free will is not really free. It is constrained by many factors. But we can still call it free because it is the only sort of free will we could ever have anyway. Human beings are fallible and contingent beings. They are not gods and cannot stand outside the stream of events to get a view that isn't a result of those events or that won't have consequences for them further down the line. So, in this respect, we could not say that a robot couldn't have free will because it relies on programming or is constrained by outside things - because all free will is constrained anyway. The various types of constraint and their impact are a discussion for another time. Here it is enough to point out that free will isn't free whether you are a human or an intelligent robot. Being programmed could, in fact, act as the very constraint which makes robot free will possible.

It occurs to me as I write this blog that one difference between humans and robots is culture. Humans have culture, and even many micro-cultures, and these greatly influence human thinking and action. Robots, on the other hand, have no culture, because culture relies on sociability and on being able to think and feel for yourself. Being able to reason, compare and pass imaginative, artistic judgments is part of this too. Again, in the film Short Circuit, the scientist played by Steve Guttenberg refuses to believe that Number 5 is alive and so he tries to trick him. He gives him a piece of paper with writing on it and a red smudge along the fold and asks the robot to describe it. Number 5 begins in a very unimaginative and precise way, describing the paper's chemical composition and the like. The scientist laughs, thinking he has caught the robot out. But then Number 5 begins to describe the red smudge, saying it looks like a butterfly or a flower, and flights of artistic fancy take over. The scientist becomes convinced that Number 5 is alive. I do not know if robots will ever be created that can think artistically or judge which of two things is more beautiful, but I know that human beings can. And this common bond with other people, which forms into culture, is yet another background which free will needs in order to operate.

I do not think that there is any more reason to worry about a robot that has free will than there is to worry about a person that has free will. It is not the freedom to do anything that is scary anyway, because that freedom never really exists. All choices are made against the backgrounds that make us and shape us, in endless connections we could never count or quantify. And, what's more, our thinking is not so much done by us in a deliberative way as it is simply part of our make-up. In this respect we act, perhaps, more like a computer, in that we think and calculate just because that is what we do once "switched on" with life. "More input!" as Number 5 said in Short Circuit. This is why we talk of thoughts occurring to us rather than of us having to sit down and deliberate to produce them in the first place. Indeed, it is still a mystery exactly how these things happen at all, but we can say that thoughts just occur to us (without us seemingly doing anything but being normal, living human beings) at least as often as we sit down and deliberately create them. We breathe without thinking "I need to breathe" and we think without thinking "I need to think".

So, all my thinking these past few days about robots has, with nearly every thought, forced me to think ever more closely about what it is to be human. I imagine the robot CHAPPiE, from the film of the same name, going from a machine made to look vaguely human to having that consciousness.dat program loaded into its memory for the first time. I imagine consciousness flooding the circuitry, and I imagine that as a human. One minute you are nothing and the next a massive rush of awareness floods your consciousness, a thing you didn't even have a second before. To be honest, I am not sure how anything could survive that rush of consciousness. It is just such an overwhelmingly profound thing. I try to imagine my first moments as a baby emerging into the world. Of course, I can't remember what it was like. But I understand most babies cry and that makes sense to me. In CHAPPiE the robot is played as a child on the basis, I suppose, of human analogy. But imagine you had just been given consciousness for the first time, and assume you manage to get over the hurdle of dealing with the initial rush: how would you grow and develop then? What would your experience be like? Would the self-awareness be overpowering? (As someone who suffers from mental illness, I find my self-awareness can at times be totally debilitating.) We traditionally protect and educate children, recognising that they need time to grow into their skins, as it were. Would a robot be any different?

My thinking about robots has led to lots of questions and few answers. I write these blogs not as any kind of expert but merely as a thoughtful person. One conclusion I think I have reached is that what separates humans from all other beings, natural or artificial, at this point is SELF-AWARENESS. Maybe you would call this consciousness. I'm not yet sure how we could meaningfully talk of an artificially intelligent robot having self-awareness. That one will require more thought. But we know, or at least assume, that we are the only animal on this planet, or indeed in the universe as far as we are aware, that knows it is alive. Dogs don't know they are alive. Neither do whales, flies, fish, etc. But we do. And being self-aware, having a consciousness and being reasoning beings is a lot of what makes us human. In the film AI, directed by Steven Spielberg, the opening scene presents the holy grail of robot builders as a robot that can love. I wonder about this though. I like dogs and I've been privileged to own a few. I've cuddled and snuggled with them and that feels very like love. But, of course, our problem in all these things is that we are human. We are anthropocentric. We see with human eyes. This, indeed, is our limitation. And so we interpret the actions of animals in human ways. Can animals love? I don't know. But it looks a bit like it. In some of the robot films I have watched, the characters develop affection for variously convincing humanoid-shaped lumps of metal. I found that more difficult to swallow. But we are primed to recognise and respond to cuteness. Why do you think the Internet is full of cat pictures? So the question remains: could we build an intelligent robot that could trip all the triggers in our very human minds, that could convince us it was alive, self-aware, conscious? After all, it wouldn't need to actually BE any of these things. It would just need to get us to respond AS IF IT WERE!

My next blog will ask: Are human beings robots?

With this blog I'm releasing an album of music made as I thought about intelligent robots and used them to help me reflect on human beings. It's called ROBOT and it's part 8 of my Human/Being series of albums. You can listen to it HERE!

Labels:

AI,

artificial intelligence,

being,

Chappie,

existence,

existentialism,

films,

life,

movies,

personhood,

philosophy,

robots,

science fiction

Wednesday, 18 March 2015

Humans and Robots: Are They So Different?

Today I have watched the film "Chappie" from Neill Blomkamp, the South African director who also gave us District 9 and Elysium. Without going into too much detail on a film that has only been out for two weeks, it's a film about a military robot which gets damaged and is sent to be destroyed but is saved at the last moment by its whizz-kid inventor, who wants to try out his new AI program (consciousness.dat) on it. What we get is a robot that becomes self-aware and develops a sense of personhood. For example, it realises that things, itself included, can die (in its case, when its battery runs out).

Of course, the idea of robot beings is not new. It is liberally salted throughout the history of the science fiction canon. So whether you want to talk about Terminators (The Terminator), Daleks (Doctor Who), Replicants (Blade Runner) or Transformers (Transformers), the idea that mechanical or technological things can think and feel like us (and sometimes not like us, or "better" than us) is not a new one. Within another six weeks we will get another example, as the latest Avengers film is based around fighting the powerful AI robot Ultron.

Watching Chappie raised a lot of issues for me. You will know, if you have been following this blog or my music recently, that questions of what it is to be human or to have "being" have been occupying my mind. Chappie is a film which deliberately weaves such questions into its narrative, and we are expressly meant to ask ourselves how we should regard this character as we watch, especially as various things happen to him or as he faces various decisions. Is he a machine or is he becoming a person? What's the difference between the two? The ending of the film, which I won't give away here, leads to lots more questions about what makes a being alive and what makes beings worthy of respect. These are very important questions which lead into all sorts of other areas, such as human and animal rights, and into more philosophical questions such as "What is it to be a person?" Can something made entirely of metal be a person? If not, are we saying that only things made of flesh and bone can have personhood?

I can't help but be fascinated by these things. For example, the film raises the question of whether a consciousness could be transferred from one place to another. Would you still be the same "person" in that case? That, in turn, leads you to ask what a person is. Is it reducible to a "consciousness"? Aren't beings more than brain or energy patterns? Aren't beings physical things too (even a singular unity of components), and doesn't which physical thing you are matter to whether you are still you? Aren't you, as a person, tied to your particular body as well? The mind or consciousness is not an independent thing free of all physical restraints. Each one is unique to its physical host. This idea comes to the fore once we start comparing robots, deliberately created and programmed entities usually built on a common template, with people. The analogy is often made in both directions, so that people are seen as highly complicated computer programs and robots are seen as things striving to be something like us - especially when AI enters the equation. But could a robot powered by AI ever actually be "like a human"? Are robots and humans just less and more complicated versions of the same thing, or is the analogy only good at the linguistic level, something not to be pushed further than this?

Besides raising philosophical questions of this kind its also a minefield of language. Chappie would be a him - indicating a person. Characters like Bumblebee in Transformers or Roy Batty in Bladerunner are also regarded as living beings worthy of dignity, life and respect. And yet these are all just more or less complicated forms of machine. They are metal and circuitry. Their emotions are programs. They are responding, all be it in very complicated ways, as they are programmed to respond. And yet we use human language of them and the film-makers try to trick us into having human emotions about them and seeing them "as people". But none of these things are people. Its a machine. What does it matter if we destroy it. We can't "kill" it because it was never really "alive", right? An "on/off" switch is not the same thing as being dead, surely? When Chappie talks about "dying" in the film it is because the military robot he is has a battery life of 5 days. He equates running out of power with being dead. If you were a self-aware machine I suppose this would very much be an existential issue for you. (Roy Batty, of course, is doing what he does in Bladerunner because replicants have a hard-wired lifespan of 4 years.) But then turn it the other way. Aren't human beings biological machines that need fuel to turn into energy so that they can function? Isn't that really just the same thing?

There are just so many questions here. Here's one: What is a person? The question matters because we treat things we regard as like us differently to things that we don't. Animal Rights people think that we should protect animals from harm and abuse because in a number of cases we suggest they can think and feel in ways analogous to ours. Some would say that if something can feel pain then it should be protected from having to suffer it. That seems to be "programmed in" to us. We have an impulse against letting things be hurt and a protecting instinct. And yet there is something here that we are forgetting about human beings that sets them apart from both animals and most intelligent robots as science fiction portrays them. This is that human beings can deliberately do things that will harm them. Human beings can set out to do things that are dangerous to themselves. Now animals, most would say, are not capable of doing either good or bad because we do not judge them self-aware enough to have a conscience and so be capable of moral judgment or of weighing up good and bad choices. We do not credit them with the intelligence to make intelligent, reasoned decisions. Most robots or AI's that have been thought of always have protocols about not only protecting themselves from harm but (usually) humans too as well. Thus, we often get the "programming gone wrong" stories where robots become killing machines. But the point there is that that was never the intention when these things were made.

So human beings are not like either animals or artificial lifeforms in this respect because, to be blunt, human beings can be stupid. They can harm themselves, they can make bad choices. And that seems to be an irreducible part of being a human being: the capacity for stupidity. But humans are also individuals. We differentiate ourselves one from another and value greatly that separation. How different would one robot with AI be from another, identical, robot with an identical AI? Its a question to think about. How about if you could collect up all that you are in your mind, your consciousness, thoughts, feelings, memories, and transfer them to a new body, one that would be much more long lasting. Would you still be you or would something irreducible about you have been taken away? Would you actually have been changed and, if so, so what? This question is very pertinent to me as I suffer from mental illness which, more and more as it is studied, is coming to be seen as having hereditary components. My mother, too, suffers from a similar thing as does her twin sister. It also seems as if my brother's son might be developing a similar thing too. So the body I have, and the DNA that makes it up, is something very personal to me. It makes me who I am and has quite literally shaped my experience of life and my sense of identity. Changing my body would quite literally make me a different person, one without certain genetic or biological components. Wouldn't it?

So many questions. But this is only my initial thoughts on the subject and I'm sure they will be on-going. So you can expect that I will return to this theme again soon. Thanks for reading!

You can hear my music made whilst I was thinking about what it is to be human here!

Of course, the idea of robot beings is not new. It is liberally salted throughout the science fiction canon. Whether you want to talk about Terminators (The Terminator), Daleks (Doctor Who), Replicants (Blade Runner) or Transformers (Transformers), the idea that mechanical or technological things can think and feel like us (and sometimes not like us, or "better" than us) is not a new one. Within the next six weeks we will get another example, as the latest Avengers film centres on the fight against the powerful AI robot Ultron.

Watching Chappie raised a lot of issues for me. If you have been following this blog or my music recently, you will know that questions of what it is to be human, or to have "being", have been occupying my mind. Chappie deliberately weaves such questions into its narrative, and we are expressly meant to ask ourselves how we should regard its title character as we watch, especially as various things happen to him and as he faces various decisions. Is he a machine or is he becoming a person? What is the difference between the two? The ending, which I won't give away here, raises many more questions about what makes a being alive and what makes beings worthy of respect. These are very important questions which lead into all sorts of other areas, such as human and animal rights, and into more philosophical questions such as "what is it to be a person?" Can something made entirely of metal be a person? If not, are we saying that only things made of flesh and bone can have personhood?

I can't help but be fascinated by these things. For example, the film raises the question of whether a consciousness could be transferred from one place to another. Would you still be the same "person" in that case? That, in turn, leads you to ask what a person is. Is it reducible to a "consciousness"? Aren't beings more than brain or energy patterns? Aren't beings physical things too (even a singular unity of components), and doesn't it matter which physical thing you are to whether you are still you? Aren't you, as a person, tied to your particular body as well? The mind or consciousness is not an independent thing, free of all physical constraints; each one is unique to its physical host. This idea comes to the fore once we start comparing people with robots, which are deliberately created and programmed entities, usually built on a common template. The analogy is often made in both directions: people are seen as highly complicated computer programs, and robots are seen as things striving to be something like us, especially when AI enters the equation. But could a robot powered by AI ever actually be "like a human"? Are robots and humans just less and more complicated versions of the same thing, or is the analogy only valid at the level of language, and not something to be pushed any further?

Besides raising philosophical questions of this kind, the film is also a minefield of language. Chappie is called "him", which indicates a person. Characters like Bumblebee in Transformers or Roy Batty in Blade Runner are also regarded as living beings worthy of dignity, life and respect. And yet these are all just more or less complicated machines. They are metal and circuitry. Their emotions are programs. They respond, albeit in very complicated ways, as they are programmed to respond. Yet we use human language of them, and the film-makers try to trick us into having human emotions about them and seeing them "as people". But none of these things are people. Each is a machine. What does it matter if we destroy it? We can't "kill" it because it was never really "alive", right? An on/off switch is not the same thing as death, surely? When Chappie talks about "dying" in the film, it is because the battery in the police robot body he inhabits will run out in five days. He equates running out of power with being dead. If you were a self-aware machine, I suppose this would very much be an existential issue for you. (Roy Batty, of course, does what he does in Blade Runner because replicants have a hard-wired lifespan of four years.) But then turn it the other way. Aren't human beings biological machines that need fuel to turn into energy so that they can function? Isn't that really just the same thing?

There are just so many questions here. Here's one: what is a person? The question matters because we treat things we regard as like us differently to things we don't. Animal rights advocates think we should protect animals from harm and abuse because, in a number of cases, we have reason to believe they can think and feel in ways analogous to ours. Some would say that if something can feel pain, it should be protected from having to suffer it. That seems to be "programmed in" to us: we have an impulse against letting things be hurt, and a protective instinct. And yet there is something we are forgetting about human beings that sets them apart both from animals and from most intelligent robots as science fiction portrays them. Human beings can deliberately do things that will harm them; they can set out to do things that are dangerous to themselves. Animals, most would say, are capable of neither good nor bad, because we do not judge them self-aware enough to have a conscience, and so to be capable of moral judgment or of weighing up good and bad choices. We do not credit them with the intelligence to make reasoned decisions. Most robots or AIs imagined in fiction have protocols for protecting not only themselves from harm but (usually) humans as well. That is why we so often get "programming gone wrong" stories in which robots become killing machines. But the point there is that this was never the intention when they were made.

So human beings are not like either animals or artificial lifeforms in this respect because, to be blunt, human beings can be stupid. They can harm themselves; they can make bad choices. And that seems to be an irreducible part of being a human being: the capacity for stupidity. But humans are also individuals. We differentiate ourselves one from another and greatly value that separation. How different would one robot with AI be from another, identical robot with an identical AI? It's a question to think about. What if you could collect up everything that you are in your mind, your consciousness, thoughts, feelings and memories, and transfer it to a new, much longer-lasting body? Would you still be you, or would something irreducible about you have been taken away? Would you actually have been changed and, if so, so what? This question is very pertinent to me because I suffer from a mental illness which, the more it is studied, is coming to be seen as having hereditary components. My mother suffers from a similar condition, as does her twin sister, and it seems my brother's son might be developing something similar too. So the body I have, and the DNA that makes it up, is something very personal to me. It makes me who I am and has quite literally shaped my experience of life and my sense of identity. Changing my body would quite literally make me a different person, one without certain genetic or biological components. Wouldn't it?

So many questions. But these are only my initial thoughts on the subject, and I'm sure they will be ongoing, so you can expect me to return to this theme soon. Thanks for reading!

You can hear my music made whilst I was thinking about what it is to be human here!

Labels: being, Bladerunner, Chappie, Daleks, death, Doctor Who, existence, existentialism, films, human, humanity, meaning, movies, personhood, philosophy, science fiction, Transformers, Ultron
